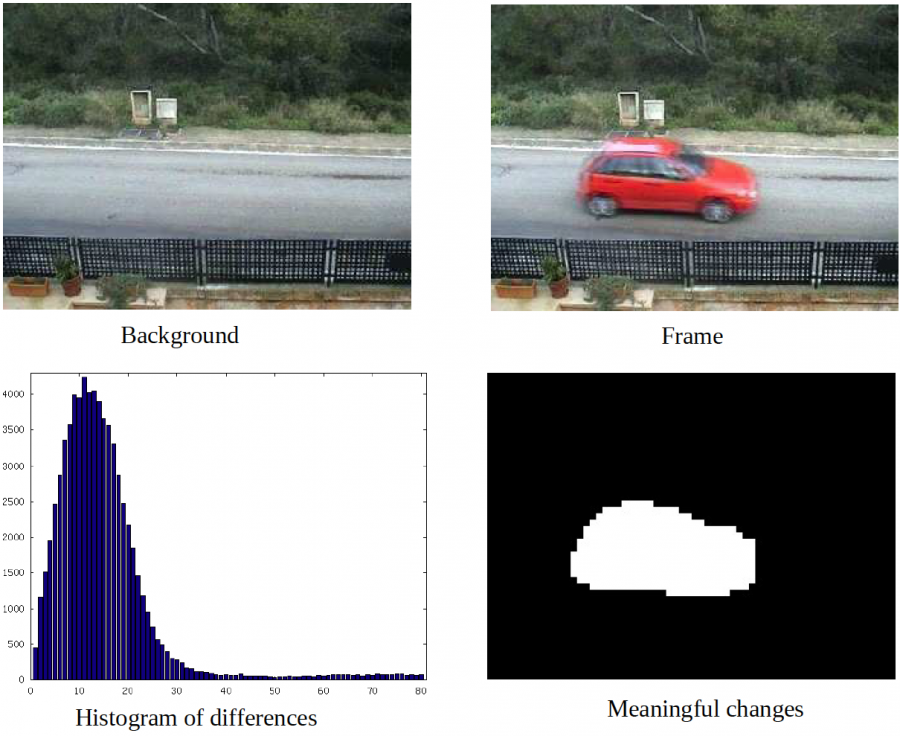

In [1] we proposed a method to detect meaningful changes between two images. A change is meaningful when it is due to a variation in the visual content of the images; changes caused by illumination alone are rejected as non-meaningful. The method was designed to ensure a low number of false detections. It depends on a few parameters that can be tuned once and for all, independently of the input images, which makes it virtually automatic.

The method is illustrated by the following images. The background of the scene is assumed to be known (or it can be computed from the scene itself). Every frame is compared to this background. The comparison is performed block-wise: the average intensity of each block is computed in both the background and the frame, and the histogram of the differences between corresponding blocks is built. Only those blocks whose difference is above a threshold are tagged as “meaningfully changed”. The detection threshold is adapted to the distribution of intensity differences in such a way that the rate of false detections is bounded.
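The block-wise comparison can be sketched in a few lines of Python. This is only an illustrative sketch, not the algorithm of [1]: the function names `block_means` and `detect_changes` and the parameters `block` and `max_false_alarms` are hypothetical. The threshold rule is also an assumption made for the example. It models the differences of unchanged blocks as Gaussian, estimates their centre and spread with the median and the median absolute deviation, and picks the threshold so that the expected number of false detections per frame stays below `max_false_alarms`.

```python
from statistics import NormalDist, median


def block_means(image, block):
    """Average intensity of each non-overlapping block x block tile.

    `image` is a list of rows of grey levels; partial tiles at the
    right and bottom borders are ignored.
    """
    rows, cols = len(image) // block, len(image[0]) // block
    means = []
    for r in range(rows):
        row = []
        for c in range(cols):
            tile = [image[r * block + i][c * block + j]
                    for i in range(block) for j in range(block)]
            row.append(sum(tile) / len(tile))
        means.append(row)
    return means


def detect_changes(background, frame, block=8, max_false_alarms=1.0):
    """Return (mask, threshold); mask[r][c] is True for changed blocks.

    Assumption (not taken from [1]): differences of unchanged blocks
    follow a Gaussian law whose centre and scale are estimated robustly.
    """
    bg = block_means(background, block)
    fr = block_means(frame, block)
    diffs = [f - b
             for fr_row, bg_row in zip(fr, bg)
             for f, b in zip(fr_row, bg_row)]

    # Robust centre and scale of the difference distribution. Removing
    # the centre discards a global illumination offset.
    center = median(diffs)
    mad = median(abs(d - center) for d in diffs)
    sigma = max(1.4826 * mad, 1e-6)  # small floor avoids a zero scale

    # Two-sided tail probability per block so that the expected number
    # of false detections over all blocks is at most max_false_alarms.
    n = len(diffs)
    p = min(max_false_alarms / n, 0.5)
    threshold = -NormalDist().inv_cdf(p / 2) * sigma

    ncols = len(bg[0])
    mask = [[abs(diffs[r * ncols + c] - center) > threshold
             for c in range(ncols)]
            for r in range(len(bg))]
    return mask, threshold
```

Calling `detect_changes(background, frame)` on two grey-level images of the same size returns one boolean per block together with the threshold that was applied. Under the Gaussian assumption above, lowering `max_false_alarms` raises the threshold, so fewer blocks are tagged.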

In [2] we developed a system that organizes the detected changes and tracks them along the sequence, which allows all the moving objects in the scene to be indexed.

[1] A. Buades, J.L. Lisani, L. Rudin, Adaptive Change Detection, 16th International Conference on Systems, Signals and Image Processing, 2009. DOI: 10.1109/IWSSIP.2009.5367788

[2] A. Buades, J.L. Lisani, L. Rudin, Fast video search and indexing for video surveillance applications with optimally controlled False Alarm Rates, IEEE International Conference on Multimedia and Expo (ICME), 2011. DOI: 10.1109/ICME.2011.6012151